Abstract

Prominent voices worry that generative artificial intelligence (GenAI) will negatively impact elections worldwide and trigger a misinformation apocalypse. A recurrent fear is that GenAI will make it easier to influence voters and facilitate the creation and dissemination of potent mis- and disinformation. We argue that despite the incredible capabilities of GenAI systems, their influence on election outcomes has been overestimated. Looking back at 2024, the predicted outsized effects of GenAI did not happen and were overshadowed by traditional sources of influence. We review current evidence on the impact of GenAI in the 2024 elections and identify several reasons why the impact of GenAI on elections has been overblown. These include the inherent challenges of mass persuasion, the complexity of media effects and people’s interaction with technology, the difficulty of reaching target audiences, and the limited effectiveness of AI-driven microtargeting in political campaigns. Additionally, we argue that the socioeconomic, cultural, and personal factors that shape voting behavior outweigh the influence of AI-generated content. We further analyze the bifurcated discourse on GenAI’s role in elections, framing it as part of the ongoing “cycle of technology panics.” While acknowledging AI’s risks, such as amplifying social inequalities, we argue that focusing on AI distracts from more structural threats to elections and democracy, including voter disenfranchisement and attacks on election integrity. The paper calls for a recalibration of the narratives around AI and elections, proposing a nuanced approach that considers AI within broader sociopolitical contexts.

Introduction

The increasing public availability of generative artificial intelligence (GenAI) systems, such as OpenAI’s ChatGPT, Google’s Gemini, and a slew of others, has led to a resurgence of public concern about the impact of AI and GenAI. Leading voices from politics, business, and the media twice listed “adverse outcomes of AI technologies” as having a potentially severe impact in the next two years (together with “mis- and disinformation”) in the World Economic Forum’s Global Risks Reports (2024, 2025). The public is worried as well. A recent survey of eight countries, including Brazil, Japan, the U.K., and the U.S., found that 84 percent of people were concerned about the use of AI to create fake content (Ejaz et al., 2024). Meanwhile, a large survey of AI researchers found that 86 percent were significantly or extremely concerned about AI and the spread of false information, and 79 percent about the manipulation of large-scale public opinion trends (Grace et al., 2024). The main worry common to all these contexts is that AI will make it easier to create and target potent mis- and disinformation and propaganda, and to manipulate voters more effectively. The integration of foundation models, particularly AI chatbots, into various digital media, and their growing use for online search, for engaging with information and news, and as personal assistants, is also a growing concern because of potential knock-on effects on how well informed people are about politics and on their political behavior.

A recurrent theme is the impact of AI on national elections. Initial predictions warned that GenAI would propel the world toward a “tech-enabled Armageddon” (Scott, 2023), where “elections get screwed up” (Verma & Zakrzewski, 2024), and that “anybody who’s not worried [was] not paying attention” (Aspen Digital, 2024). We critically examine these claims against the backdrop of the 2023-2024 global election cycle, during which nearly half of the world’s population had the opportunity to participate in elections, including in high-stakes contests in countries such as the U.S. and Brazil.

We make three contributions: First, we argue that despite widespread predictions of AI-driven electoral manipulation through, for example, deepfakes, as well as AI-informed targeted advertising and misinformation campaigns, the influence of AI on national elections was largely overshadowed by other, much more important factors, such as politicians’ willingness to misinform, lie, and break other norms. We identify several key factors contributing to this discrepancy between alarmist predictions and observed outcomes:

- The inherent challenges of mass persuasion, regardless of the tools employed

- The difficulty of reaching target audiences in an oversaturated information landscape and high-choice media environments

- Emerging evidence regarding the limited effectiveness and use of AI-driven microtargeting in political campaigns

- The complex interplay of socioeconomic, cultural, and personal factors that shape voting behavior, which often outweighs the influence of AI-generated content

- The ways in which people consume information and decide who to trust and who to listen to

We argue that these factors explain why the worst predictions about the role of GenAI in recent national elections did not come to pass, and that they should make us skeptical of claims that GenAI will upend elections in the years to come. Much of this is settled knowledge, grounded in long scholarly traditions in media effects research and political science. For these reasons, we were skeptical of the impact of AI on elections even before the broader realization that the predicted AI apocalypse had not occurred (Simon et al., 2023).

Second, we provide an overview of the possible reasons for the skewed discourse on AI and elections. We diagnose the alarmist discourse surrounding AI and elections as a version of what Orben (2020) has termed the “Sisyphean cycle of technology panics”—the repeating, ultimately unproductive pattern of public and institutional overreaction to new technologies—with several concurrent push and pull factors within political, technological, regulatory, media, and academic communities fostering this narrative.

Third, we interrogate the possible consequences of a skewed understanding of AI’s impact on national elections and situate it alongside other, at times overlooked, risks to elections and democracy, arising both from advances in AI and from more structural and long-standing factors. While acknowledging the potential risks associated with AI in electoral contexts, such as the amplification of existing social inequalities and the erosion of trust in democratic processes, we argue that the disproportionate focus on GenAI may distract from other, more pressing threats to democracy. These include, but are not limited to, forms of voter disenfranchisement; unequal electoral competition, including unequal access to digital tools; intimidation of election officials; attacks on journalists and politicians; and various forms of state oppression.

We argue that the current narrative surrounding GenAI’s impact on elections, especially in democratic systems, requires recalibration. We propose a more nuanced approach to understanding the role of AI in electoral processes, one that considers the broader sociopolitical context and avoids both outright technological determinism and extreme social construction of technology arguments. We aim to acknowledge the effects of AI, within limits, while recognizing the social and political conditions that constrain them.

As such, this paper makes the following contributions to ongoing debates about the impact of AI on democracy:

- Integrating AI into existing theories and empirical literature in political communication and political science;

- Staking out a research agenda for the future study of this topic;

- Offering insights for policymakers, journalists, and researchers concerned with preserving the integrity of electoral processes in the “age of GenAI.”

Background: Elections, Mass Persuasion, GenAI

Before focusing on the role of GenAI in elections, we define GenAI and summarize key developments in the field, and then turn to what elections are and what shapes voting behavior. Finally, we summarize key literature on mass persuasion, all of which is integral to our later arguments.

Definition of AI and GenAI

AI broadly refers to the development and application of computer systems or machines capable of performing tasks that typically require human intelligence, including learning, reasoning, natural language processing, problem-solving, and decision-making (Mitchell, 2019; Russell & Norvig, 2009). The OECD offers a comprehensive definition, describing an AI system as a “machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments” (OECD AI Principles, 2024). These systems vary in their levels of autonomy and adaptability after deployment. Recent advances in AI have led to the development of “general-purpose AI models,” designed to be adaptable to a wide range of downstream tasks (Bengio et al., 2025), for example through additional fine-tuning. Foundation models are usually large-scale neural networks trained on diverse datasets and capable of performing a wide range of tasks across multiple modalities, including text, code, images, and audio. Large language models (LLMs) are a subset of foundation models specialized in text processing and generation (House of Lords Communications and Digital Committee: Large Language Models and Generative AI, 2024).

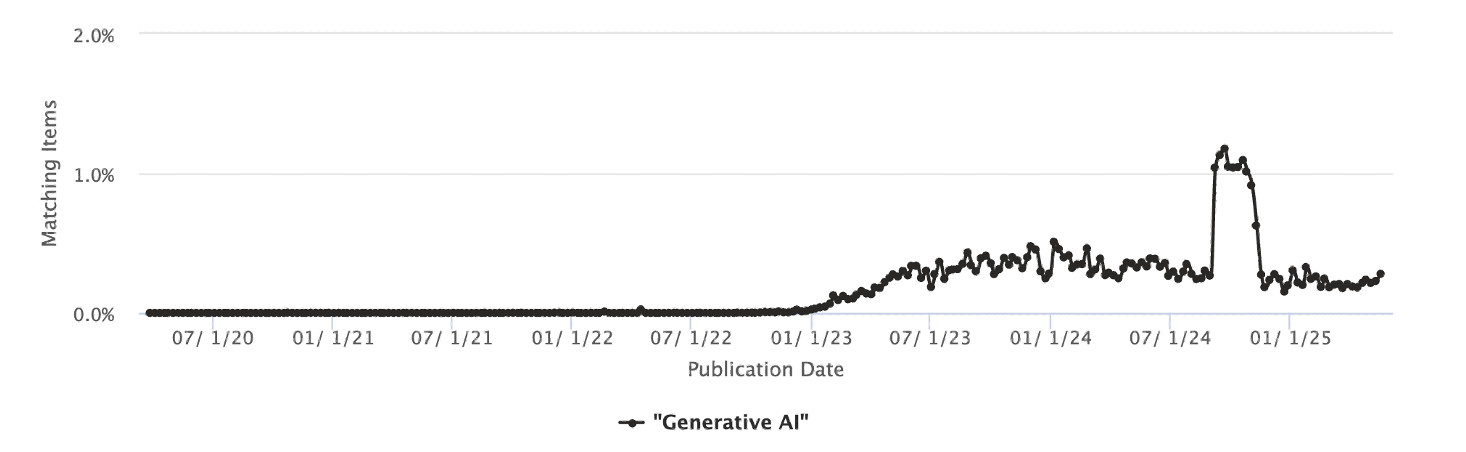

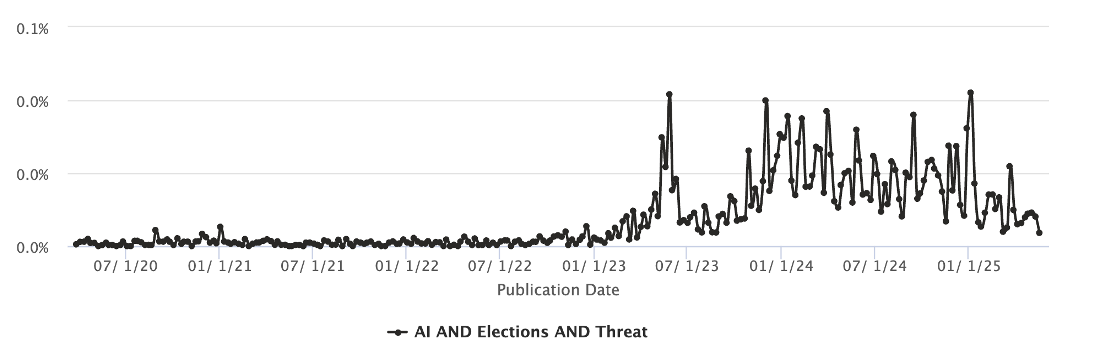

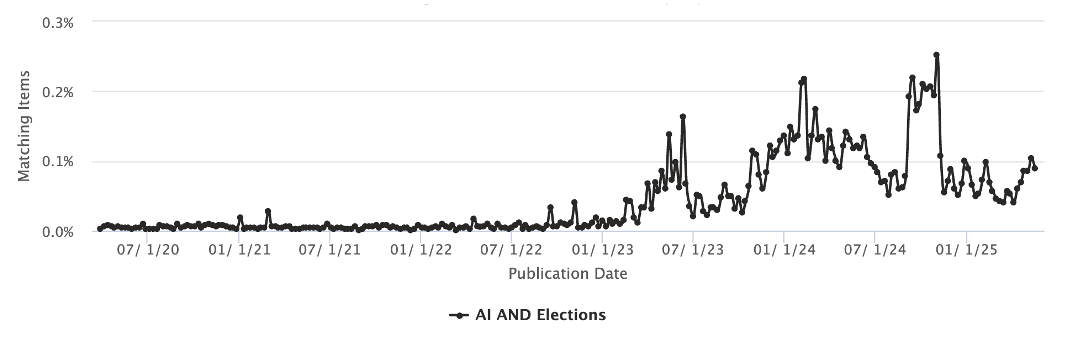

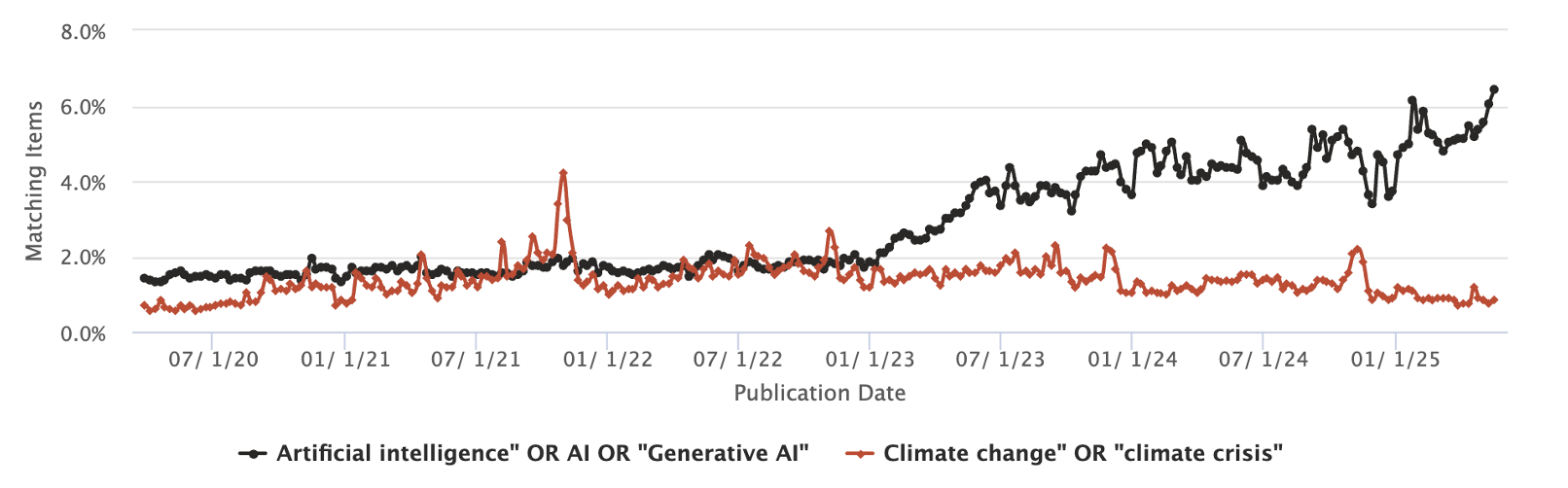

Figure 1. The term ‘generative AI’ has seen a substantial rise in coverage since 2022. The chart shows the weekly share of all stories matching the term “Generative AI” across approximately 1,500 English-language media sources. Source: Global English Language Sources database, provided by MediaCloud, spanning the period from April 1, 2020, to May 21, 2025.

The term ‘generative AI’ (or ‘GenAI’) became prominent following the public release of ChatGPT, a chatbot developed by the U.S. firm OpenAI, on November 30, 2022. The term loosely refers to AI systems that, depending on the instructions provided, can rapidly create new data, including content across various modalities and formats that is often perceived as indistinguishable from human-generated content (e.g., text) or from content produced by other analog or digital means (e.g., audio or video). The launch of ChatGPT at the end of 2022 prompted a wave of similar releases from major technology companies, including Google and Meta, as well as from a range of smaller firms, which introduced their own chatbots and AI models in quick succession. This trend, and the fact that these systems can be accessed and used through natural language interfaces, has significantly expanded the accessibility and usefulness of AI systems for general users.

Elections and voting behavior

We should clarify that this paper focuses on the effects of GenAI in broadly democratic systems. While some of the arguments may apply to electoral or closed autocracies, these are not the focus of this article. There is ongoing debate in political science about the exact nature of democracy, a discussion that also falls outside the scope of this paper. Instead, we adopt a minimalist definition of democracy as a system of government in which power is vested in the people and rulers are chosen through competitive elections. Elections, in this context, are a mechanism through which political conflict in society is channeled, within an institutional framework, into real power over society for a limited period (see also Jungherr, 2023).

People do not approach elections as blank slates, waiting to be persuaded by the latest piece of information or political argument. Instead, voting behavior is shaped by a complex nexus of factors, usually a mixture of long-standing predispositions and short-term contextual factors (Campbell et al., 1960; Zaller, 1992). Classic models suggest that partisanship, often formed early in life, serves as a primary filter through which individuals process political information and cast their ballots (Green et al., 2002). Identity-based considerations, such as social group attachments and ideological orientations, can interact with media exposure to shape voters’ impressions of and feelings toward candidates and policy issues (Huddy et al., 2023; Tenenboim-Weinblatt et al., 2022).

Political behavior, in turn, reflects the interplay of these attitudes with external mobilization efforts, social networks, and media frames. For example, socioeconomic status not only correlates strongly with political engagement but also shapes individuals’ sense of political efficacy and their capacity to mobilize effectively (Brady et al., 1995; Oser et al., 2022). Get-out-the-vote campaigns, peer group discussions, and opinion leadership can all prompt individuals to (dis)engage in political activities, such as attending rallies or casting a ballot (Hansen & Pedersen, 2014). In modern contexts, social media platforms intensify this dynamic: They amplify message exposure and facilitate political discussion, and political actors use them widely to stay informed, disseminate information, and engage voters, while voters actively or passively consume information or take political action on these same platforms. However, not all groups experience these effects equally; systemic factors like socioeconomic status and educational resources can either enable or constrain political participation (Leighley & Nagler, 2013), and not everyone has access to, or engages with, the digital media where politics and political discourse play out.

The limits of mass persuasion

Political campaigns, along with commercial advertising, represent the most ambitious efforts at mass persuasion. This persuasion can have several aims: increasing or decreasing political participation (e.g., in the form of turnout in general, or for or against a particular side), increasing or decreasing political activism (e.g., raising money or political support during rallies), and shaping voting decisions. Elections are high-stakes events where candidates, parties, and interest groups pour—sometimes enormous—resources into persuading voters. For example, during the 2024 U.S. presidential election, $1.35 billion was spent on online campaign ads on Google and Meta (Brennan Center for Justice, 2024), while more than $15 billion was spent in the whole election cycle (Federal Election Commission, 2025). Despite these massive investments, numerous high-quality studies have shown that the persuasive effects of political advertising are limited in the U.S. (Allcott et al., 2025; Coppock et al., 2020, 2022; Haenschen, 2023) and elsewhere (Hager, 2019). For instance, Kalla and Broockman (2017) write that “the best estimate of the effects of campaign contact and advertising on Americans’ candidates choices in general elections is zero. First, a systematic meta-analysis of 40 field experiments estimates an average effect of zero in general elections. Second, we present nine original field experiments that increase the statistical evidence in the literature about the persuasive effects of personal contact tenfold. These experiments’ average effect is also zero” (p. 1). 
With greater statistical power to detect ever smaller effects, a study of an eight-month political advertising campaign on social media delivered to 2 million persuadable voters found that “persuasion campaigns can indeed cause small differential turnout effects—much smaller than pundits and media commentators often assume, but our field experimental study is large enough to show that these effects are distinct from zero” (Aggarwal et al., 2023, p. 335). A large-scale experiment conducted on Facebook and Instagram, which removed political ads for six weeks prior to the 2020 U.S. presidential election, found null effects on political knowledge, polarization, perceived legitimacy of the election, political participation, candidate favorability, and turnout (Allcott et al., 2025).

Research on advertising more broadly has shown that ads have small effects on consumers, far smaller than commonly assumed (DellaVigna & Gentzkow, 2010; Hwang, 2020; Shapiro et al., 2021). For instance, a meta-analysis of 751 short-term and 402 long-term direct-to-consumer brand advertising elasticities showed that “the mean short-term advertising elasticity across all observations (1940-2004) is .12, the median elasticity is .05, and elasticity is declining over time. The finding that advertising elasticity is ‘small’ may upset many practitioners, especially those in the agency business” (Sethuraman et al., 2018, p. 469). “Elasticity” here quantifies how much ‘bang’ the advertiser gets for their ‘buck,’ so to speak, and this bang is surprisingly small: On average, raising ad expenditure by one percent boosted sales by only about one-tenth of a percent, and this figure was declining over time. In other words, each additional dollar of advertising buys even less incremental consumer response than it once did. More generally, most attempts at mass persuasion fail to influence people’s behaviors in meaningful ways, or have negligible effects, when they are not well aligned with people’s incentives (the things that motivate them), preferences (what they like or dislike), or values (their principles or standards of behavior; Mercier, 2020). For instance, public health campaigns encouraging people to eat healthier or quit smoking have little impact compared to making healthy options more affordable or convenient, or increasing the price of cigarettes (Arno & Thomas, 2016; Bader et al., 2011).
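The elasticity figures quoted above can be unpacked with the standard definition of an elasticity. In the sketch below, S stands for sales and A for advertising expenditure; this notation is ours and not necessarily that of Sethuraman et al. The percentage changes use a first-order approximation, which is reasonable only for small changes in spending.

```latex
\begin{aligned}
\varepsilon &= \frac{\partial \ln S}{\partial \ln A} \approx \frac{\Delta S / S}{\Delta A / A}
  \quad\Longrightarrow\quad \frac{\Delta S}{S} \approx \varepsilon \cdot \frac{\Delta A}{A} \\
\varepsilon = 0.12 \ (\text{mean}),\ \tfrac{\Delta A}{A} = 1\% &\;\Rightarrow\; \tfrac{\Delta S}{S} \approx 0.12\% \\
\varepsilon = 0.05 \ (\text{median}),\ \tfrac{\Delta A}{A} = 1\% &\;\Rightarrow\; \tfrac{\Delta S}{S} \approx 0.05\%
\end{aligned}
```

Under the same approximation, a 10 percent increase in advertising spending would raise sales by roughly 1.2 percent at the mean elasticity and by roughly 0.5 percent at the median.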

The fields of communication and political science have long moved away from models of mass communication that assumed strong, direct effects on public opinion (such as the “hypodermic needle model”). Instead, contemporary research favors more nuanced models of influence, such as agenda-setting and framing, that highlight smaller, indirect effects (Bryant & Oliver, 2009). Research on the limited influence of mass persuasion clashes with the widespread assumption that people are gullible and easily persuaded. It is well documented that people perceive others to be much more susceptible than themselves to negative media effects, such as those of propaganda or misinformation, but also of pornographic content (Davison, 1983). This “third-person effect” also plays a central role in fears about the effect of misinformation (Altay & Acerbi, 2024) and may explain why fears about the effect of GenAI on misinformation and persuasion during elections are so widespread. Rather than being overly gullible, people are often quite resistant to changing their views. When people encounter new information, they tend to update their beliefs in its direction; that is, they adjust their views slightly to reflect what they have just learned. However, this updating is often minimal and rarely leads to lasting shifts in attitudes or behaviors (Coppock, 2023), especially in political contexts. For instance, numerous studies have demonstrated that while fact-checking (itself an attempt to persuade people to hold correct beliefs) reliably reduces misperceptions, it has a negligible influence on political attitudes (e.g., feelings toward a candidate) and behaviors (e.g., voting intentions; Nyhan et al., 2019; Porter & Wood, 2024).
More broadly, research on social learning shows that people consistently favor their own intuitions, experiences, and beliefs over information communicated by others, even when they have no reason to trust themselves more than others (Bailey et al., 2023). Rather than being overly influenced by communicated information, we frequently waste it by failing to use it (Morin et al., 2021). Finally, a meta-analysis of news discernment across 40 countries and more than 194,000 participants has shown that people are not gullible: On average, people are (very) good at spotting false news (Pfänder & Altay, 2025). Yet, while people can tell true from false news, they tend to be excessively skeptical of true news and to err on the side of skepticism rather than credulity (Pfänder & Altay, 2025), all of which makes persuasion harder.

A skeptical reader may wonder: What about the effect of repeated exposure to persuasive content over long periods of time? Consistent with this concern, the literature in experimental psychology has shown that people are more likely to believe repeated claims (Pillai, Fazio, & Effron, 2023). However, there are reasons to doubt that this “illusory truth effect” translates well outside of experimental settings. For instance, while repeated exposure to false statements made by Donald Trump increased belief in those statements among Republicans, it decreased belief in them among Democrats (Pillai, Kim, & Fazio, 2023). Moreover, the illusory truth effect disappears if the source is not trusted (Orchinik et al., 2024) or if the information environment is perceived as low-quality (Orchinik et al., 2025). While it is difficult to estimate the causal effect of repeated exposure to persuasive content over time, a large study by Aggarwal et al. (2023), which exposed 1,711,547 persuadable voters to an average of 754 political ads over eight months, nonetheless found minimal impact on voters.

Skeptical readers may also argue that elites, such as politicians, play a dominant role in shaping public opinion. While elites can indeed act as agenda setters, influencing which issues receive attention and how they are framed, this influence is reciprocal rather than unidirectional (Gilardi et al., 2022). Elite cues help individuals, especially those with little political knowledge, navigate complex issues by providing simplified narratives and partisan heuristics (Bullock, 2020). These cues can influence policy preferences, but their influence is limited. People stop following party cues if they go too strongly against their self-interest (Slothuus & Bisgaard, 2021) or deviate too much from their own political stances (Mummolo et al., 2019). Moreover, party cues mostly cause people to behave as if they were better informed (i.e., a party cue is often a valid information shortcut; Tappin & McKay, 2021). Party cues also do not override the policy information people already hold: People do not blindly follow them but instead rely on the substance of the policy, even when exposed to party cues (Bullock, 2011, 2020). Finally, party cues do not reduce receptivity to arguments and evidence (Tappin et al., 2023). In general, individuals are not passively exposed to information; they actively seek out and engage with content that aligns with their prior beliefs, which limits the ability of the media and elites to profoundly and durably change minds (Arceneaux & Johnson, 2013).

In this section, we have mostly framed persuasion in negative terms, but persuasion is not inherently negative; it is an essential element of democracy. It is through mass persuasion that societies build consensus, resolve disputes, and make collective decisions without resorting to force. In many ways, and in specific circumstances, mass persuasion can work. When Russia attacked Ukraine in 2022, or when Queen Elizabeth II died, people quickly updated their beliefs and acknowledged that the Queen was no longer alive and that Ukraine was no longer at peace. Similarly, people accept counterintuitive scientific knowledge about the formation of continents or the laws of physics. Most of this happens simply because we trust journalists and scientists on these matters and because their positions converge; this trust is largely built through these experts’ demonstrated competence and reliability (Mercier, 2020). Even self-described populists, often assumed to be particularly resistant to expertise, seem to engage similarly. Peresman et al. (2025) found that while populists are less willing to accept expert advice, both populists and non-populists are equally responsive to strong arguments and expert source characteristics; that is, they are more likely to accept advice when it is supported by strong arguments. GenAI does not yet appear to benefit from the trust afforded to experts: For instance, only 27 percent of people trust GenAI for news and information about politics, and only seven percent actually use GenAI for this purpose (Ejaz et al., 2024). In the future, as AI use grows and LLMs become more accurate, they are likely to benefit from this trust, although their persuasiveness will remain dependent on the external sources they rely on and on their perceived agreement with sources that people find reliable.

Mass persuasion can occur and be effective not only when the source is highly trusted but also when people are exposed to strong arguments. For example, most people radically shift their views when shown a clear demonstration of the correct solution to a logical or factual problem (Mercier & Claidière, 2022). LLMs can generate and summarize effective arguments, and growing evidence suggests that discussions with LLMs can significantly change people’s opinions on a wide range of topics: They can reduce belief in conspiracy theories (Costello et al., 2024), reduce concerns about HPV vaccination (Xu et al., 2025), increase pro-climate attitudes (Czarnek et al., 2025), and even reduce prejudice toward undocumented immigrants (Costello et al., 2025). While LLMs are clearly persuasive, they are not necessarily more effective than the most effective existing messages (Chen et al., 2025; Hackenburg & Margetts, 2024a; Sehgal et al., 2025). In addition, exposing audiences to LLMs’ persuasive messages is currently more costly than relying on commonly used persuasion methods. As Chen et al. (2025) note, “it is currently much easier to scale traditional campaign persuasion methods than LLM-based persuasion” (p. 1) and “while AI-based persuasion can match human performance on a per-person basis under ideal conditions of forced exposure, its real-world deployment is currently constrained by exposure costs and audience sizes” (p. 13).

If LLMs can be used to persuade people of socially desirable viewpoints, couldn’t bad actors also use them to convince people of harmful or undesirable viewpoints? The answer is ‘yes,’ but early evidence suggests that LLMs are persuasive because they provide factual information and targeted counterarguments (Costello et al., 2025). For instance, while the LLM in the study on conspiracy theories was extremely effective at reducing belief in false conspiracy theories, it did not reduce belief in true conspiracies (such as the CIA’s covert MK Ultra program; Costello et al., 2024). Thus, on average, LLMs may be more persuasive when advocating for positions that are solidly backed by evidence. It remains an open question to what extent the persuasiveness of LLMs’ arguments correlates with the factual accuracy of the conclusions they advocate (Jones & Bergen, 2024). There are reasons to think that, in general, people recognize good arguments and are more receptive to factual information (Mercier & Sperber, 2017); however, LLMs are able to cherry-pick facts to advocate for dubious positions. That said, the evidence discussed here comes from experiments in which participants were paid to interact with LLMs. It remains unclear whether people would engage with LLMs in the same way outside such experimental settings. In addition, people find reasons to discount information they disagree with and limit their exposure to such information. For instance, Wikipedia is widely distrusted in conspiracy theorist circles because it is perceived as politically biased; for better or worse, the same will happen with LLMs.

In sum, people are not gullible, and mass persuasion is difficult, especially when messages do not align with people’s existing incentives, preferences, or values. People are not merely passive recipients of information; they selectively expose themselves to sources they agree with and trust.

Common Arguments about GenAI and Risks to Elections

According to various voices, including some leading AI researchers, GenAI will upend elections and pose a major threat to democracy. These arguments, we suggest, can be divided into six broad categories (Table 1). We do not claim that this list is exhaustive. Instead, it is a categorization of the most common arguments with concerns ranging from the content side (what voters see and hear) to the infrastructure side (the integrity of the voting systems) and the broader societal impact of eroding trust in democratic processes.

Table 1. Six arguments about the assumed negative impact of GenAI on elections.

| Argument | Explanation of claim | Presumed effect | Source |
|---|---|---|---|
| 1. Increased quantity of information and misinformation | Due to its technical capabilities and ease of use, GenAI can be used to create information at scale with great ease. | Increased reach of misinformation; increased reach for political actors; crowding out of other information | Bell (2023), Fried (2023), Hsu & Thompson (2023), Marcus (2023), Pasternack (2023), Ordonez et al. (2023), Tucker (2023), Zagni & Canetta (2023), Safiullah & Parveen (2022) |
| 2. Increased quality of information and misinformation | Due to its technical capabilities and ease of use, GenAI can be used to create information or misinformation perceived to be of high quality at low cost. | Increased persuasion of voters (all information); increased susceptibility of voters (misinformation) | Fried (2023), Gold & Fischer (2023), Ordonez et al. (2023), Pasternack (2023), Shah & Bender (2023), Zagni & Canetta (2023), Safiullah & Parveen (2022) |
| 3. Increased personalization of information and misinformation at scale | Due to its technical capabilities and ease of use, GenAI can be used to create high-quality (mis)information at scale, personalized to a user’s tastes and preferences. | Increased persuasion of voters | Benson (2023), Fried (2023), Hsu & Thompson (2023), Pasternack (2023), Safiullah & Parveen (2022) |
| 4. New modes of information consumption | The integration of GenAI into existing digital infrastructures and the increasing use of GenAI for information seeking lead to changing consumption patterns around election information. | Higher likelihood of voters being misinformed through GenAI; provision of lower-quality or biased information about elections; crowding out and long-term undermining of authoritative sources of election information | Angwin et al. (2024), Marinov (2024), Simon, Fletcher, & Nielsen (2024), Rahman-Jones (2025), Safiullah & Parveen (2022), Jaźwińska & Chandrasekar (2025) |
| 5. Destabilization of reality | The realism of GenAI content creates uncertainty regarding what is real. | Fostering undue skepticism toward accurate information; decline in public trust and institutional legitimacy; weaponization to deny inconvenient truths (“liar’s dividend”) | Goldstein & Lohn (2024), Dowskin (2024), Carpenter (2024), West & Lo (2024) |
| 6. Human-AI relationships | Users form deeper, more persistent relationships with personalized and agentic AI systems. | Increased persuasion of voters; increased misinformation of voters; manipulation of voters | Knight (2016), France24 (2025), Kirk et al. (2025) |

It should be noted that these categories are not mutually exclusive and overlap in some cases. We use “information” here as a general term that puts a lesser emphasis on the exact mode of delivery (e.g., advertisements in various digital media versus campaign messages published or uttered by candidates). In the section below, we discuss these claims in turn, arguing that current concerns about the effects of GenAI on elections are overblown in light of the available evidence and theoretical considerations. Afterward, we turn to a broader discussion to address common objections and examine the available evidence on how GenAI has been used in recent election cycles.

Addressing the Main Claims about AI Risks around Elections

In the following section, we will address each of the claims outlined above, based on recent evidence and the wealth of preexisting literature on technological change. One challenge in making claims about the role of new technologies in politics is the time lag between their emergence, their initial effects, and the availability of empirical research assessing these effects (see Orben, 2020, p. 1150f). While various empirical studies have examined the effects of GenAI in a political context at the time of writing, further research is needed. However, we argue that adequate expectations for the effects of GenAI can be formed from this new empirical material and the extensive existing literature on the role of digital technologies in elections (see also Dommett & Power, 2024).

1. AI will increase the quantity of information and misinformation around elections

The increase in the quantity of information, and especially misinformation, caused by the use of GenAI could have various consequences, from polluting the information environment and crowding out quality information to swaying voters (see Table 1). Below, we dissect this argument.

The first premise is that GenAI will enhance the production of misinformation more than that of reliable information. If GenAI primarily supports the creation of trustworthy content, its overall impact on the information ecosystem would be positive. However, it is difficult to quantify AI’s role in content creation. The ways in which AI is used to support the production of reliable information (Simon, 2025) may be more subtle, and thus more difficult to detect, than the ways it is used to produce false information. For the sake of argument, we assume that AI will be used exclusively to produce misinformation.

The second premise is that AI-generated content not only exists but also reaches people and captures their attention. This is the main bottleneck: For AI misinformation (and for information in general) to have an effect, it must be seen. On its own, (mis)information has no causal power. Yet attention is a scarce resource: People have finite time, so the amount of information they can meaningfully engage with is limited (Jungherr & Schroeder, 2021), and any piece of politically relevant information has to compete with other types of information, such as entertainment. During elections, voters are already overwhelmed with messages and ads, making any additional content, AI-generated or not, another drop in the ocean. In low-information environments or data voids (boyd & Golebiewski, 2018), where fewer messages circulate, AI-generated content could have a stronger impact. But even in such cases, it is unclear why GenAI content would outperform authentic, non-AI-generated content. If anything, the proliferation of AI content may increase the value of, and demand for, authentic content (see the Discussion).

The third premise is that, after reaching its audience, AI content will be persuasive in some way. While strong direct effects on voter preferences are unlikely (see the section on mass persuasion and the determinants of voting), weak and indirect effects are conceivable. Elections are not only about votes but also about the quality of political debate and media coverage. Without swaying voters, AI could flood the information environment in ways that degrade public discourse and democratic processes. We do not find this flooding argument particularly convincing. Since most people rely on a small number of trusted sources for news and politics (Newman et al., 2024; 2025), misleading AI content from less credible sources would likely have limited influence, if it is seen at all. If mainstream media and trusted news influencers do not misuse AI, why would AI-generated content from untrusted actors cause confusion? Flooding is concerning when there is significant uncertainty about whom to trust and when individuals lack control over their information exposure. Yet, in practice, international survey research shows that people still often turn to mainstream media despite low levels of trust and an expanding pool of content creators (Fletcher et al., 2025; Newman et al., 2024; 2025; Strömbäck et al., 2020). In Western democracies, mainstream news outlets have so far shown restraint in their use of GenAI. While some media organizations are using AI to assist in news production and distribution (Simon, 2024, 2025), these uses have generally been transparent and responsible. There is little evidence to suggest that mainstream news organizations are using AI to create misleading content or fake news. In fact, many news organizations are taking steps to ensure that AI-generated content is clearly labeled and that editorial oversight remains in place (Becker et al., 2025). This responsible approach stands in stark contrast to fears that AI could dominate political news coverage and create mass disinformation. Instead of misleading their audiences, news organizations are using AI tools to enhance journalistic processes such as fact-checking or summarizing data-heavy reports.

The rise of news influencers, too, does not necessarily indicate a breakdown of the information ecosystem, although it presents a shift. In France, for example, HugoDécrypte, the country’s largest news influencer, has grown into a respected media entity with mainstream, high-quality coverage. Trusted sources, whether they are news influencers or news outlets, have strong reputational incentives to appear credible, as their audience’s trust—and their reach—largely depends on it (Altay et al., 2022a). Using AI to mislead—and being exposed by competitors—would be a death sentence for most news sources. These imperfect but powerful reputational incentives largely explain why we generally try to avoid spreading falsehoods despite the ease of writing false text or making false claims (Sperber et al., 2010).

In general, fears about AI-driven increases in misinformation miss the mark because they focus too heavily on the supply of information and overlook the role of demand. People consume and share (mis)information that aligns with their worldviews and seek out sources that cater to their perspectives (Arceneaux & Johnson, 2013). Motivated reasoning, group identities, and societal conflict have been shown to increase receptivity to misinformation (Mazepus et al., 2023). For example, people with unfavorable views of vaccines are much more likely to visit vaccine-skeptical websites (Guess et al., 2020). Sharing misinformation is often also a political tool, with radical-right parties in particular resorting to it “to draw political benefits” (Törnberg & Chueri, 2025). And some people consume and share false information out of social frustration, seeking to disrupt an “established order that fails to accord them the respect that they feel they personally deserve” and hoping to gain status in the process (Petersen et al., 2023). Moreover, people do not even need to believe misinformation deeply to consume and share it: Some share news of questionable accuracy because it has qualities that compensate for its potential inaccuracy, such as being interesting-if-true (Altay et al., 2021). In addition, the people most susceptible to misinformation are not passively exposed to it online; instead, they actively search for it (Motta et al., 2023; Robertson et al., 2023). Misinformation consumers are distinctive not because of special access to false content (a difference in supply) but because of their propensity to seek it out (a difference in demand). The fact that demand drives misinformation consumption and sharing is perhaps the most important lesson from the misinformation literature. As Budak et al. write: “In our review of behavioural science research on online misinformation, we document a pattern of low exposure to false and inflammatory content that is concentrated among a narrow fringe with strong motivations to seek out such information” (2024, p. 1).

To wrap up, the presence of more misinformation due to GenAI does not necessarily mean that people will consume more of it. For an increase in supply to translate into additional effects, there must be unmet demand or a limited supply. Yet the internet already contains plenty of low-quality content, much of which goes unnoticed (Budak et al., 2024). The barriers to creating and accessing misinformation are already extremely low, and we see no good reason to assume that people will show a higher demand for AI-generated misinformation than for existing forms of misinformation. Throughout history, humans have shown a remarkable ability to make up false stories, from urban legends to conspiracy theories. Misinformation about elections is easy to create: All it requires is taking an image out of context, slowing down video footage, or simply saying plainly false things. Under these conditions, GenAI content has very little room to operate. Moreover, the demand for misinformation is easy to meet: Misinformation sells as long as it supports the right narrative and resonates with people’s identities, values, and experiences (more on this below).

2. AI will increase the quality of election misinformation

The quality of AI-generated (mis)information has sparked concerns about its potential to deceive people and erode trust in the information environment. By quality, we mean that GenAI enables the creation of text, imagery, audio, and video with such lifelike fidelity that observers cannot reliably distinguish these synthetic creations from material produced through conventional human activity—whether written by an author, photographed with a camera, or recorded with a microphone. Below, we examine this position and argue that while information quality is crucial in many contexts—such as determining guilt in a criminal case—it plays a much smaller role in the acceptance and spread of misinformation, including in the context of elections. The core premise of the argument is that the danger to elections stems from GenAI making election misinformation more successful and impactful by improving its quality—and that AI could facilitate this by lowering the costs of producing high-quality misinformation.

Let us look at this argument step by step. First, it is undoubtedly true that GenAI models can now produce high-quality misinformation. Plausible but false text, audio, and visual material are all within the purview of recent, widely available, and easy-to-use AI systems. Will these AI systems be used to produce more high-quality misinformation than reliable information? Possibly, not least because of differing reputational incentives. News organizations and public-interest organizations depend on audience trust and brand reputation, so overt reliance on AI can backfire, both because of audience skepticism around AI (Nielsen & Fletcher, 2024; Toff & Simon, 2024) and because the errors AI systems still make can damage their trust and reputation. As a result, many outlets remain cautious in their AI use (Borchardt, 2024; Radcliffe, 2025), which limits how widely AI is used to produce fully AI-generated, high-quality, accurate information. By contrast, misinformation producers face no comparable reputational constraint: Faster, cheaper, and less labor-intensive production of high-quality material is an advantage for actors with little to lose from being caught fabricating content. Moreover, professional journalism still enjoys stronger financial and institutional support than most misinformation operations, so the marginal value of further cost reductions in producing high-quality content is lower for newsrooms than for bad-faith actors. For these reasons, we assume that reductions in the cost and turnaround time of high-quality outputs will confer proportionally greater benefits on misinformation producers than on reliable publishers, though GenAI can be, and is being, harnessed to support responsible journalism as well (Simon, 2025).

However, this need not make a significant difference in the context of elections, for several reasons. First, while GenAI undoubtedly enables the creation of more sophisticated false content and might benefit its producers more, it is not clear that higher-quality misinformation would actually be more successful in persuading or misleading people. As we have already discussed, other factors, such as demand for misinformation, ideological alignment, emotional appeal, resonance with personal experiences, and the source, matter in determining who accepts and shares misinformation, and why. In other words: It is not just content quality that determines the spread and influence of misinformation. These factors will not simply be overridden by an increase in content quality, and higher quality does not automatically lead to increased demand for misinformation.

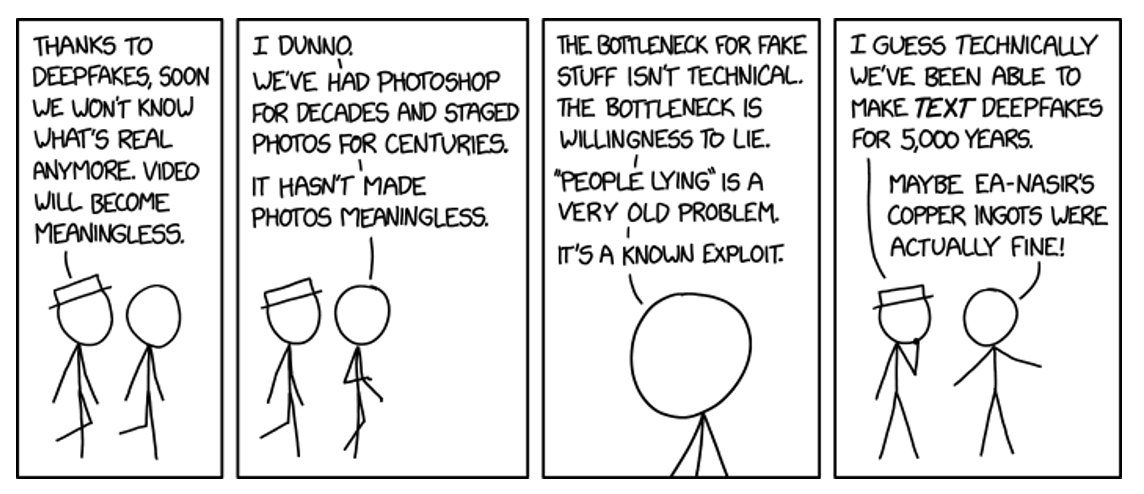

Second, misinformation producers already have numerous tools in their arsenals to enhance content quality but still often resort to low- or even no-tech, low-effort approaches. Image editing tools like Photoshop have long allowed people to convincingly alter images or create new ones (Kapoor & Narayanan, 2024), yet “cheap fakes” (Paris & Donovan, 2019), images taken out of context (Brennen et al., 2020), and plain false statements continue to persist and thrive. The main reason is that these basic forms of false or misleading information are ‘good enough’ for their intended purpose: They fulfill a demand for false information that is not primarily concerned with the quality of the content but with the narrative it supports and the political purpose it serves. In other words: High-quality false information is not even needed. Political actors can simply twist or frame true facts in ways that support their narratives, a technique used frequently, including by (political) elites. For instance, emotional (true) news stories about individual immigrants committing crimes are often instrumentalized by far-right politicians to support unfounded claims about migrant crimes and ultimately to justify anti-immigration narratives (Parasie et al., 2025). Moreover, there are trade-offs between content quality and other dimensions: Higher-resolution video can sometimes look too polished to feel authentic, while more realistic and plausible messages may be less visually arresting. Truth is often boring, and getting closer to it may inevitably make content less engaging.

Again, the problem is less the improvement in content quality than the demand for misinformation that justifies problematic narratives. The source of misinformation also matters more than the quality of the content (Harris, 2021): A poor-quality video shot on an old smartphone and shared by the BBC will be much more impactful than a high-quality video shared by the median social media user, because people trust the BBC to authenticate the video. While GenAI can be used to increase the quality of content, it can hardly be used to increase the perceived trustworthiness of its source.

In the future, GenAI tools will continue to improve and will certainly allow for more sophisticated attempts at manipulating public opinion. While we should keep an eye out and closely monitor and regulate harmful AI uses in elections, we do not believe that improvement in the quality of AI-generated content will necessarily lead to more effective voter persuasion. Humans have been able to write fake text and tell lies since the dawn of time, but they have found ways to make communication broadly beneficial by holding each other accountable, spreading information about others’ reputations, or punishing liars and rewarding good informants (Sperber et al., 2010). We expect these safeguards to hold even under conditions where content of lifelike fidelity can be created—and is available—at scale.

Figure 2. XKCD comic by Randall Munroe on the threat posed by deepfakes, licensed under CC BY-NC 2.5. Source: https://xkcd.com/2650/.

3. AI will improve the personalization of information and misinformation at scale

A third common argument is that AI could enhance voter persuasion by supercharging the creation of highly personalized (mis)information, including personalized advertisements.

This argument is an extension of the concept of microtargeting to GenAI systems. Its popularity likely originates in stories about the alleged outsized effectiveness of microtargeting in recent political contests, such as the supposed effects of the voter targeting efforts of the political consultancy Cambridge Analytica during the 2016 European Union referendum and the 2016 U.S. presidential election (Jungherr et al., 2020; Simon, 2019). Microtargeting describes a form of online targeted content delivery (for example, via advertising or users’ in-app feeds). Users’ personal data is analyzed to identify the demographic characteristics or “interests of a specific audience or individual,” who are then targeted with personalized messages designed to persuade them (Information Commissioner’s Office, n.d.). While addressing audiences with similar interests (e.g., in certain policy issues) and traits (e.g., age, gender) has been possible for many years and has been actively exploited by political campaigns around the world, it has been far too costly to craft a separate message for every single individual based on these aspects. GenAI, the argument goes, has removed this constraint, allowing for the effective creation of individually personalized and targeted (and thus more persuasive) content at scale.

To understand this, we must briefly examine how GenAI systems enable the creation of personalized content. GenAI systems are pretrained on a large general corpus of data and then refined in a post-training stage through approaches such as reinforcement learning from human feedback (RLHF). However, current systems are unable to represent the full range of user preferences and values (Kirk et al., 2025). It is also not clear how much information current systems encode about users themselves and how much this shapes (i.e., personalizes) the responses they provide (even though this happens to a degree), especially around political content. To our knowledge, OpenAI’s ChatGPT and Google’s Gemini are the first systems to incorporate a “memory” of a user’s preferences and potentially use these preferences to shape subsequent responses. Beyond explicit ‘memory’ modules, models can also infer demographic and ideological traits from user prompts (given enough of them) by leveraging correlations learned during pretraining. For example, experiments show that GPT-4 and similar LLMs can infer users’ location, occupation, and other personal information from publicly available texts such as Reddit posts (Staab et al., 2024). This ‘latent inference’ route therefore complements, and may even supersede, memory-based customization, as systems can tailor replies for people ‘like you’ without needing explicit profile data.

In theory, any such system that has information about an individual’s traits and preferences (or is capable of reliably inferring it) can be used to create content more aligned with the user’s worldview and preferences, content that should therefore be more persuasive. Personalized information is more convincing and relevant than nontargeted information (e.g., targeted ads about local events featuring music you like, rather than generic ads) and in many ways GenAI can help personalize information, potentially making it easier to persuade or mislead people.

However, there are several complications to this claim. First, technical feasibility is not the same as actual effectiveness. The effectiveness of politically targeted advertising in general is mixed, with at best small and context-dependent effects (Jungherr et al., 2020; Simon, 2019; Zarouali et al., 2022). Previous studies on microtargeting and personalized political ads reveal that data-driven persuasion strategies often face diminishing returns without broader messaging alignment and credible, on-the-ground campaigning (Kreiss & McGregor, 2018). Experimental evidence from the U.S. further shows diminishing persuasive returns once targeting exceeds a few key attributes (Tappin et al., 2023). Skeptical voices would rightly argue that these findings do not account for more powerful GenAI systems that can create personalized content at scale in response to customized prompts, all at little cost. However, evidence that personalized output from AI systems is more persuasive than a generic, nontargeted persuasive message is thin. A recent review of the persuasive effects of LLMs concluded that the “current effects of persuasion are small, however, and it is unclear whether advances in model capabilities and deployment strategies will lead to large increases in effects or an imminent plateau” (Jones & Bergen, 2024, p. 25). Hackenburg and Margetts (2024a, 2024b) found that “while messages generated by GPT-4 were persuasive, in aggregate, the persuasive impact of microtargeted messages was not statistically different from that of nontargeted messages”, and Hackenburg et al. (2025) concluded that “further scaling model size may not much increase the persuasiveness of static LLM-generated political messages.” The approach used to study such questions also makes an important difference: Studies measuring the perceived persuasiveness of (text) messages by asking participants to rate how persuasive they find them (e.g., Simchon et al., 2024) find much larger effect sizes than more rigorous studies that measure actual change in participants’ post-treatment attitudes (Hackenburg & Margetts, 2024a; Hackenburg et al., 2025). Similarly, the effects of political ads appear much stronger when measured through self-reported persuasion rather than actual persuasion, notably because people rate messages they agree with more favorably (Coppock, 2023). In general, the persuasive effects of political messages are small and likely to remain so, regardless of whether they are (micro)targeted and personalized, because mass persuasion is difficult under most circumstances (Coppock, 2023; Mercier, 2020).

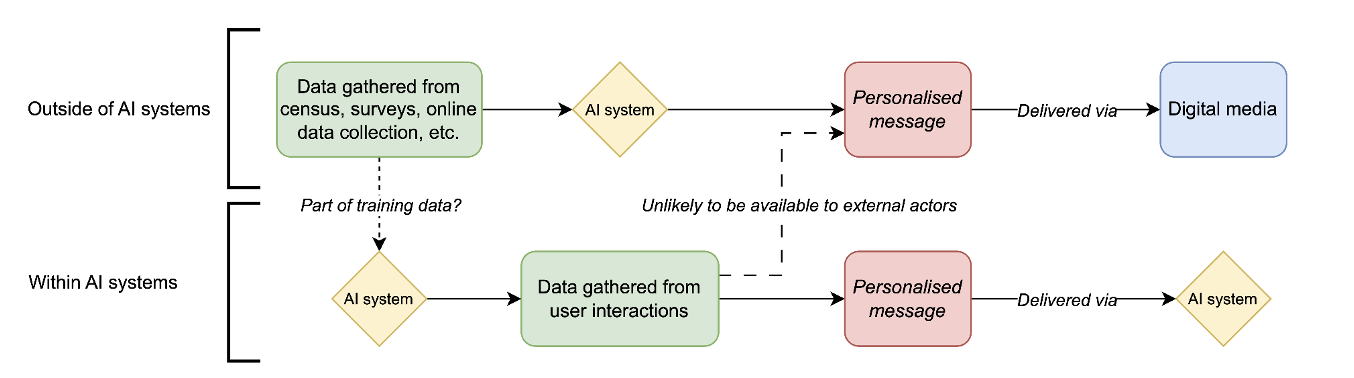

Second, effective message personalization plausibly requires detailed, up-to-date data about each individual. While the collection and availability of voter data are commonplace in many countries (Dommett et al., 2024; Kefford et al., 2022), including general data about traits that shape political attitudes and voting behavior (e.g., race, age, gender, partisan affiliation, voting records), this is neither uniform nor consistent across countries. As Dommett and colleagues have found, for example, parties in various countries routinely gather voter information via state records, canvassing, commercial purchases, polling, and now online tools, but the depth and type of data they can obtain depend heavily on each country’s legal framework, local norms, and the specific access rules of individual jurisdictions. Data access is also shaped by the resources of political actors. Major parties generally have the resources to blend state files, purchased data sets, and digitally captured traces, whereas smaller parties often lack the funds or capacity to canvass intensively or pay for voter lists. Data about individual preferences, psychological attributes, and political views (or data associated with them) are even more difficult to obtain. Even when the user-level data needed for precise political personalization can be obtained, hurdles remain. Data sets can be noisy and incomplete (Dommett et al., 2024; Hersh, 2015). The data may lack temporal validity, and the links between the data and the construct they are meant to capture (e.g., inferring political views from purchasing behavior) may be weak. In addition, unforeseen external shocks not reflected in the data, and other unobserved features that would be meaningful for accurate personalization, compound these issues. These challenges, which already hobble general attempts at predicting human behavior with traditional forms of predictive AI (Narayanan & Kapoor, 2024, p. 29), will likely also complicate efforts to personalize content with GenAI systems in a way that leads to significant attitudinal or behavioral change, limiting the fidelity of any downstream personalized targeting. Again, skeptical voices will argue that data gathered directly from voters’ interactions with various AI systems (e.g., through the aforementioned memory functions), or inferred from those interactions, will be superior in quality. We think it is unlikely that this data would be exempt from these constraints (e.g., temporal validity, external shocks, incompleteness), or that it would become widely accessible outside of AI firms in a way that allows third parties (such as political parties, candidates, other political actors, or malicious actors) to easily create individually personalized messages that are then also delivered to the right person through various channels (not just within AI systems like chatbots but also as ads or messages on other platforms). It is also unlikely that AI firms would allow such targeted personalization attempts within their own systems (Goldman & Kahn, 2025). Such data access and use would also be prohibited in many countries (see Dommett et al., 2024).

Figure 3. A schematic representation of how personalized messages could be delivered with AI. Illustration courtesy of the authors.

Theoretical pathways for the delivery of messages personalized with AI

Third, to be successful, a personalized message must reach the individual in question. This could happen in two ways (see Figure 3). In the first scenario, the message is delivered as part of the output of a GenAI system itself, for example, as part of a conversation with a user (we discuss this in more detail in Section 6 below). However, so far, no AI or platform company has stated an intention to give political parties (or other actors with political intentions) access to their systems so that they can create and deliver targeted, personalized responses to individuals at scale (as was the case, at least to a degree, with Facebook during various political campaigns in the past; Kreiss & McGregor, 2018). Any such move would likely be challenged and would be subject to legal restrictions in various countries. In addition, it is doubtful that most users would react positively to unsolicited political messages from AI systems targeted to their preferences. In the second delivery scenario, a message is personalized using AI and information gathered about users and then delivered via existing digital platforms that they use daily. However, while GenAI systems (at least theoretically) reduce the cost of creating personalized information, they do not reduce the cost of reaching people individually on these platforms. After all, targeting people with tailored messages online is not free: Certain audiences are more expensive to reach, and prices differ between political actors (Lambrecht & Tucker, 2019; Votta, Dobber, et al., 2024). In addition, as mentioned earlier, attention is a scarce resource. Any piece of politically relevant information, including personalized messages or ads, regardless of their accuracy, must compete with other types of information for people’s attention. There is also evidence that the more people encounter ads that try to persuade them, the more skeptical they become of them and the worse they become at remembering the messages they have seen (Bell et al., 2022). According to political campaigners, people often do not pay attention to personalized political advertisements (Kahloon & Ramani, 2023). People not only recognize such personalized messages but also actively dislike those that are excessively tailored (Gahn, 2024; Hersh & Schaffner, 2013) or tailored using traits considered too personal (Bon et al., 2024; Vliegenthart et al., 2024). Their use can also backfire, reducing favorability when the messages come from parties that voters do not already agree with (Binder et al., 2022; Chu et al., 2023; Vliegenthart et al., 2024).

Fourth, the growing abundance of voter-level data and increasingly sophisticated AI tools does not automatically ensure that political actors will deploy them. Past microtargeting efforts offer a telling example: Audits of 2020 U.S. Facebook ads reveal that official campaigns used the most granular targeting mainly for highly negative messages, leaving much of the platform’s segmentation potential untapped (Votta et al., 2023). While political campaigns worldwide use targeted advertising, spending is mostly “allocated towards a single targeting criterion” (such as gender) (Votta, Kruschinski, et al., 2024). Although wealthier countries and electoral systems with proportional representation see more money going to microtargeting that combines multiple criteria (Votta, Kruschinski, et al., 2024), European case studies document legal, budgetary, and cultural constraints that have kept microtargeting in bounds (Dobber et al., 2019; Kruschinski & Haller, 2017). Decisions in political campaigns (and in many other organizations) are also not solely, or even primarily, driven by data or new technological systems (see, e.g., Christin, 2021, for the use of metrics in news organizations); instead, human agency and organizational dynamics play a crucial role in determining when and why such data and systems are, or are not, integrated into strategic decision-making (Dommett, personal communication, April 2025). Organizational sociology helps explain this gap: Rather than acting as fully rational optimizers, political actors ‘satisfice’ under bounded rationality and established routines. In other words: Political actors do not choose the absolute best option; instead, limited time, information, and ingrained habits lead them to pick the first solution that seems ‘good enough.’ For example, research on recent technology-intensive campaigns in the U.S. shows that party strategists often override model recommendations in favor of gut feeling, coalition politics, or candidate preferences (Kreiss, 2016). Reinforcing this observation, a post-2024-election report from the Democratic-aligned group Higher Ground Labs (HGL Team, 2024) observed that AI never dominated campaigning as some practitioners had predicted; most teams limited the technology to low-stakes tasks such as drafting emails and social posts and managing event logistics, even though personalization with AI was technically feasible at the time (at least in theory). Only a handful ventured into more sophisticated uses like predictive modeling, large-scale data analysis, or real-time voter engagement, and even then, adoption was typically the result of individual experimentation rather than a structured organizational rollout. The report stresses that many staffers simply lacked the know-how to push GenAI further and found little institutional guidance to help them do so, illustrating how entrenched routines, uneven skills, and weak organizational support continue to constrain the political uptake of advanced technologies (HGL Team, 2024). A more recent survey found that while AI use among political consultants in the U.S. is growing, it is mostly used for mundane administrative tasks (Greenwood, 2025). There is little reason to expect techniques enabling personalized messaging with generative AI systems to be any different. In short, the beliefs, capacities, and priorities of political actors remain bottlenecks between what data and GenAI theoretically make possible in terms of personalization and what political campaigns actually do.

Fifth, and finally, the overall argument assumes that GenAI will be used to mislead more than to inform. However, governments, institutions, and news organizations will also use AI chatbots to inform citizens and provide them with personalized and reliable information (as discussed in the earlier section on mass persuasion). Personalized targeting with information is also not inherently anti-democratic; pluralist and deliberative theories of democracy hold that citizens participate most effectively when they receive information and representation that is salient to their lived interests and identities (Dahl, 1989; Mansbridge, 1999). In this sense, AI-enabled tailoring can also fit into the long-standing democratic practice whereby parties canvass different interest groups with messages that match their specific concerns and build coalitions around those issues. Such tools could, in theory, also expand informational equality by delivering high-quality, language-appropriate content to communities that mainstream media often underserve, including linguistic minorities, first-time voters, and rural electorates (see, e.g., Vaccari & Valeriani, 2021). The democratic question is therefore less whether personalization occurs than whether citizens retain exposure to diverse viewpoints and high-quality information.

4. New modes of information consumption

A fourth argument that GenAI spells trouble for elections is that integrating GenAI systems into existing digital infrastructures such as social media and online search, together with the growing use of GenAI for information seeking, is changing how people consume election information. This, in turn, could present several risks. First, voters may be more likely to be misinformed because these systems provide them with incorrect information (regardless of why they do so). Second, these systems may provide voters with lower-quality or biased information in the context of elections. Third, their use may crowd out or otherwise undermine authoritative sources of information, including about elections.

As explained earlier, elections are mechanisms by which political conflict in society is channeled into real power over society. They are also a mechanism of control, allowing voters to remove from office candidates who underperform or do not match their preferences. Central to this is voters' ability to evaluate politicians. As Jungherr et al. write: "To keep incumbents accountable, voters need to know what politicians do; to select from among all candidates the one that will best represent them, voters need information on what politicians want" (2020, p. 216). The availability of information, especially from independent news media, is critical in this context, and a range of studies has linked a better-informed public to better performance by politicians (Besley & Burgess, 2002; Brunetti & Weder, 2003; Freille et al., 2007; Snyder & Strömberg, 2010).

Increasingly, GenAI is used to provide people with news, or news-like information, including about elections. At the time of writing, GenAI has been integrated into online search engines (Google, Microsoft Bing) and social media platforms (Meta's Facebook, Instagram, WhatsApp, and Messenger, as well as X), in addition to being widely available as stand-alone chatbots. Survey research conducted in 2024 in Argentina, Denmark, France, Japan, the U.K., and the U.S. shows that GenAI use is increasing: 24 percent of respondents reported using GenAI to get information, although just five percent said they had used it to get the latest news (Fletcher & Nielsen, 2024). With the growing adoption and integration of GenAI, these numbers will very likely rise over time. Indeed, the 2025 Digital News Report found that the share of people using GenAI to get the news had already risen to seven percent (Newman et al., 2025).

However, GenAI systems, particularly chatbots, can produce plausible-looking information about elections that is partially or entirely false or misleading. For example, the news outlet Proof News and the Institute for Advanced Study in Princeton found in February 2024 that answers from five different AI models to questions about the 2024 U.S. election "were often inaccurate, misleading, and even downright harmful" (Angwin et al., 2024). Similar findings were reported for three chatbots in the context of the 2024 EU parliamentary elections (Marinov, 2024; Simon et al., 2024), although another study (Simon, Fletcher, & Nielsen, 2024) found somewhat better results for the 2024 U.K. general election. Meanwhile, internal research by the BBC found that AI assistants' answers about the news, and about BBC content in particular, contained inaccuracies and distortions (Rahman-Jones, 2025), and Jaźwińska and Chandrasekar (2025) likewise found that leading GenAI systems frequently misrepresented news content.

Given the likelihood that more people will use such systems as sources of news, and given these systems' weaknesses, a current fear is that users will be more likely to be misinformed, for example around elections (and potentially misinform others in turn), because these systems give them incorrect information. Particularly problematic here is that GenAI systems often provide only a single answer, unlike search results, which present users with a range of options to choose from. They can also deliver factually incorrect information with the same apparent certainty as factually correct information. However, there are several reasons why the impact of this, especially around elections, might not be as severe as feared. First, receiving plausible-sounding (but potentially incorrect) answers from a GenAI system does not differ significantly from communication flows in everyday life. Statements by friends, family, or colleagues routinely include inaccuracies, yet people generally navigate these situations with ease and without dramatically altering their (political) views (i.e., people are epistemically vigilant; Mercier, 2020; Sperber et al., 2010). This suggests that while a single incorrect statement generated by a chatbot might have an impact in certain contexts, it is not fundamentally different from other sources of error-laden or biased information encountered through normal social and media interactions. In addition, as we have already argued, factors such as partisan identity mediate the effect of new information on voters' attitudes and behaviors. Second, generative systems can be, and already have been, deliberately designed to mitigate such risks. Google's AI-generated search answers (AI Overviews), for example, are not shown for certain topics at all and otherwise link users to sources (although it remains unclear to what extent users will interrogate these sources, and a growing number of accounts indicate that users often do not click through to them). Emerging models could incorporate further features that enable users to consult original, authoritative sources or weigh competing accounts, thereby reducing the likelihood of uncritical acceptance of misleading statements. They could also be designed to provide standardized template responses to particularly important questions (e.g., 'When is election day?'). Third, incidental exposure to diverse information remains common across digital and offline networks (Ross Arguedas et al., 2022; Vaccari & Valeriani, 2021), reducing the chance that a single chatbot response will decisively shape political attitudes.

A second concern around the increasing use of GenAI for information consumption is that it will provide users, and thus voters, with lower-quality or biased information in the context of elections. This concern revolves less around the factual accuracy of responses than around issues such as the plurality of views presented (e.g., voters receiving information on the views of only one party rather than several), the level of detail and nuance (e.g., sensational content that oversimplifies a complex issue relevant to a given election), and the representation of minority views in the output (Jungherr, 2023). A third, related concern is that the increasing use of AI for information and news could crowd out other authoritative sources of information (because they are not represented in the output) or otherwise undermine them (for example, because AI products that compete with those of news organizations weaken their economic position), with negative downstream effects for the information available about and during elections. None of these concerns has so far been empirically validated. Still, such second-order effects could be problematic for democratic life at large, because access to quality information and news helps people be more informed and can help increase resilience to misinformation (Altay, 2024; Humprecht et al., 2020). This holds even where these effects are not decisive during elections, given how people's existing attitudes and cognitive biases shape their electoral behavior, as explained earlier.

5. Destabilization of reality

A fifth argument around AI and elections holds that the ability to create realistic-looking content with GenAI will sow confusion and uncertainty about what is real. This could in turn increase skepticism toward accurate information, diminish trust in reliable sources such as quality news outlets and experts, and be weaponized to deny inconvenient truths ('This footage is not real, it's AI!'), a tactic known as the liar's dividend.

GenAI can indeed be used to create highly realistic images, videos, and audio. The possibility that any realistic-looking content may be AI-generated could make people more skeptical, especially of content from sources they do not know or trust. This heightened skepticism has not yet been rigorously documented, although a first, small-scale experimental study in Germany showed that exposure to deepfakes significantly decreased the general credibility attributed to all types of media, even after participants were told the content was fake. The study also found that people became less confident in their ability to distinguish real from fake media, regardless of whether they had been exposed to authentic or manipulated content (Weikmann et al., 2024). Anecdotally, we have seen a handful of instances where people have become more skeptical, for example of images of nature or viral entertainment videos on social media, as well as in complaints about the rise of so-called AI slop (low-quality, AI-generated content).

Yet claims that this development will foster widespread skepticism toward all kinds of accurate information should be taken with a pinch of salt, given the complexity of how people evaluate information. Individuals filter political information through existing mental models shaped by their political socialization and position (see the earlier background section). They also rely on more than a message's overt realism to assess it; audiences also consider their preexisting familiarity with the source and the source's credibility. As Harris (2021) argues in the case of video, a video derives its evidentiary power not solely from its quality or content "but also from its source. An audience may find even the most realistic video evidence unconvincing when it is delivered by a dubious source. At the same time, an audience may find even weak video evidence compelling so long as it is delivered by a trusted source." In addition, subtle inconsistencies in modality or content, audiences' own preexisting knowledge of a topic or claim, and the broader context in which the information is presented all play a role (Hameleers et al., 2023; Mercier, 2020). Individuals often exhibit a healthy degree of scrutiny and critical thinking when confronted with novel content, even from a young age (Harris, 2012; Sperber et al., 2010). Historical precedents such as staged photographs, heavily edited political broadcasts, and the introduction and widespread availability of Photoshop and similar editing software likewise raised concerns about media authenticity, yet society has repeatedly developed new norms and tools to discern and counter manipulation (Habgood-Coote, 2023) and largely seems to have retained trust in depictions of reality. Moreover, ongoing advances in digital literacy programs and AI-detection technologies could bolster the capacity of both the public and experts to identify and reject manipulated media. To date, there is little evidence to suggest that the rise of GenAI has escalated into widespread doubt about the legitimacy of all information.

The suspicion and uncertainty that any content may be AI-generated, and the difficulty in some cases of detecting it, will inevitably shape norms and perceptions of information online. General levels of skepticism and distrust toward new information encountered online may well rise. However, while the added noise in the information environment will be detrimental, established providers of reliable information might see little effect at all. Trust in institutions and news is driven by a plethora of factors (partisanship, habituation, and rituals of trustworthiness such as transparency; see Fawzi et al., 2021, for an overview), many of which are not directly affected by AI or the existence of AI content. On the contrary, rather than becoming universally skeptical of all content, people may become more selective about whom they trust and gravitate even more strongly toward sources they consider authoritative or authentic. Many information channels favored by young people, such as TikTok, Snapchat, or Twitch, already foreground this kind of informal, on-the-fly, personal, even intimate content, where audiences form (sometimes strong) parasocial relationships with content creators. Undoubtedly, these sources may still disseminate misleading or false information, but this is not fundamentally an AI issue. Rather, it is a characteristic of high-choice media environments, which allow a plurality of sources of varying quality, standards, and formats to exist and proliferate.